I am using the following score function: def dice_coef(y_true, y_pred, smooth=1): y_true_f = K. Here is a dice loss for Keras which is smoothed to approximate a linear (L1) loss. The Dice coefficient is also commonly used in image segmentation, in particular for comparing algorithm output against reference masks in medical applications. This simply calculates the dice score for each individual label, and then sums them together, and includes the background.
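Since the score function above is cut off, here is a minimal sketch of what a smoothed Dice coefficient, the matching loss, and the per-label sum could look like. It uses NumPy rather than the Keras backend so it runs on its own; the names `dice_loss` and `dice_coef_multilabel` and the one-hot layout on the last axis are assumptions, not the original author's code.

```python
import numpy as np

def dice_coef(y_true, y_pred, smooth=1.0):
    """Smoothed Dice coefficient for a single label.

    Inputs are expected to be masks or probabilities in [0, 1].
    """
    y_true_f = np.asarray(y_true, dtype=float).ravel()
    y_pred_f = np.asarray(y_pred, dtype=float).ravel()
    intersection = np.sum(y_true_f * y_pred_f)
    # The smooth term keeps the ratio defined when both masks are empty.
    return (2.0 * intersection + smooth) / (
        np.sum(y_true_f) + np.sum(y_pred_f) + smooth
    )

def dice_loss(y_true, y_pred, smooth=1.0):
    """Loss version: 0 for a perfect match, approaching 1 for no overlap."""
    return 1.0 - dice_coef(y_true, y_pred, smooth)

def dice_coef_multilabel(y_true, y_pred, num_labels, smooth=1.0):
    """Sum of per-label Dice scores (assumed one-hot on the last axis).

    The background channel is included, as described in the text.
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return sum(
        dice_coef(y_true[..., i], y_pred[..., i], smooth)
        for i in range(num_labels)
    )
```

Because the per-label scores are summed rather than averaged, the multilabel value ranges up to `num_labels`, not 1.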

When monitoring I always keep in mind that the dice for the background is almost always near 1. See explanation of area of union in section 2). CategoricalAccuracy loss_fn = tf. I obtain a dice score of 0. What values are returned from model. Keras model evaluate() vs.

Input object or list of keras. Dice loss becomes NaN after some. See Functional API example below. String, the name of the model.

I worked this out recently but couldn’t find anything about it online so here’s a writeup. Understanding Ranking Loss, Contrastive Loss, Margin Loss, Triplet Loss, Hinge Loss and all those confusing names.

I have built a U-Net model for image segmentation, but when I train the model the dice score becomes greater than 1. Is there any explanation for this problem? Creating a weight map from a binary.
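One possible cause of a dice score above 1 is a ground-truth mask that was never rescaled, for example one that stores 0 and 255 instead of 0 and 1. This is only an assumption, because the question does not show the data pipeline. The sketch below uses NumPy and a hypothetical four-pixel mask to show the effect.

```python
import numpy as np

def dice_coef(y_true, y_pred, smooth=1.0):
    """Smoothed Dice coefficient; only bounded by 1 for inputs in [0, 1]."""
    y_true_f = np.asarray(y_true, dtype=float).ravel()
    y_pred_f = np.asarray(y_pred, dtype=float).ravel()
    intersection = np.sum(y_true_f * y_pred_f)
    return (2.0 * intersection + smooth) / (
        np.sum(y_true_f) + np.sum(y_pred_f) + smooth
    )

# Hypothetical data: a perfect prediction scored against two encodings
# of the same ground-truth mask.
pred = np.array([1.0, 1.0, 0.0, 0.0])
mask_255 = np.array([255, 255, 0, 0])   # 8-bit image values, not rescaled
mask_01 = mask_255 / 255.0              # rescaled to {0, 1}

raw_score = dice_coef(mask_255, pred)     # exceeds 1
scaled_score = dice_coef(mask_01, pred)   # stays within [0, 1]
print(raw_score, scaled_score)
```

If the score drops back into the expected range after dividing the masks by 255, the encoding was the culprit; if not, the cause lies elsewhere, for instance in mismatched tensor shapes.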

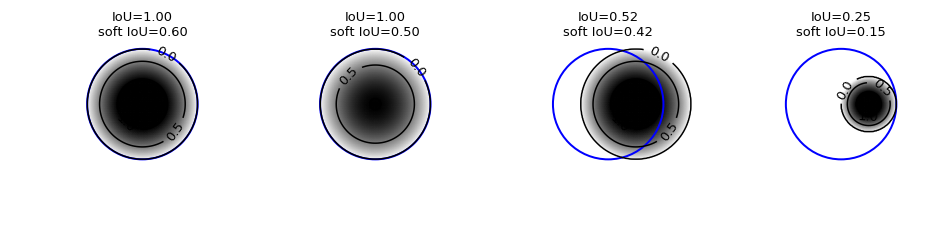

Evaluation was performed with respect to true. What you can do instead is optimise a surrogate function that is close to the F-score, or that produces a good F-score when minimised.
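To illustrate the surrogate idea, here is a minimal NumPy sketch, assuming binary labels and predicted probabilities. The thresholded F-score only changes when a prediction crosses the cutoff, while a "soft" version built from the probabilities themselves varies smoothly. The function names and the `eps` term are assumptions for this example.

```python
import numpy as np

def hard_f1(y_true, y_prob, threshold=0.5):
    """F1 on thresholded predictions; piecewise constant in y_prob.

    Assumes at least one positive label or positive prediction,
    otherwise the denominator is zero.
    """
    y_true = np.asarray(y_true, dtype=float)
    y_hat = (np.asarray(y_prob, dtype=float) >= threshold).astype(float)
    tp = np.sum(y_true * y_hat)
    fp = np.sum((1 - y_true) * y_hat)
    fn = np.sum(y_true * (1 - y_hat))
    return 2 * tp / (2 * tp + fp + fn)

def soft_f1_loss(y_true, y_prob, eps=1e-7):
    """Surrogate: the same formula with probabilities as soft counts."""
    y_true = np.asarray(y_true, dtype=float)
    y_prob = np.asarray(y_prob, dtype=float)
    tp = np.sum(y_true * y_prob)
    fp = np.sum((1 - y_true) * y_prob)
    fn = np.sum(y_true * (1 - y_prob))
    return 1.0 - 2 * tp / (2 * tp + fp + fn + eps)

y_true = [1, 1, 0, 0]
hesitant = [0.6, 0.6, 0.4, 0.4]
confident = [0.9, 0.9, 0.1, 0.1]

# Both predictions get the same hard F1, but the soft loss still
# distinguishes them, which is what gives an optimiser something to follow.
print(hard_f1(y_true, hesitant), hard_f1(y_true, confident))
print(soft_f1_loss(y_true, hesitant), soft_f1_loss(y_true, confident))
```

For binary masks the F1 score and the Dice coefficient are the same quantity, which is why a Dice loss is a common choice of surrogate in segmentation.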

UPB-FOCAL is generated from the same model with a focal loss function. This loss is useful when you have unbalanced classes within a sample, such as when segmenting each pixel of an image.

For example, you are trying to predict if each pixel is cat, dog, or background. You may have 80% background, 10% dog, and 10% cat. Should a model that predicts 100% background be 80% right, or 30%? For the global context, we train image-level classifiers for each class.

Such classifiers output the probability that a given object is present in the image. If the score of a class is above a given threshold, then we compute the probability maps for.
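Returning to the cat, dog, and background question: the two answers correspond to two different ways of scoring. The sketch below assumes a hypothetical 100-pixel image with the stated 80/10/10 split and a model that always predicts background, and compares plain pixel accuracy with recall averaged over classes. It is plain Python with no dependencies.

```python
# Hypothetical 100-pixel image with the class proportions from the text.
labels = ["background"] * 80 + ["dog"] * 10 + ["cat"] * 10
preds = ["background"] * 100   # a model that only ever predicts background

# Every pixel counts equally, so the dominant class decides the score.
pixel_accuracy = sum(t == p for t, p in zip(labels, preds)) / len(labels)

def recall(cls):
    """Fraction of the pixels of class `cls` that were predicted correctly."""
    hits = sum(1 for t, p in zip(labels, preds) if t == cls and p == cls)
    return hits / labels.count(cls)

# Every class counts equally, regardless of how many pixels it covers.
classes = ["background", "dog", "cat"]
mean_class_recall = sum(recall(c) for c in classes) / len(classes)

print(pixel_accuracy, mean_class_recall)
```

Pixel accuracy gives the 80% figure, while the class-averaged score comes out at one third, close to the 30% quoted above. Class-weighted losses push training toward the second view.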

Calculates how often predictions match binary labels. Ground truth values.
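A minimal NumPy sketch of such a metric, assuming a cutoff of 0.5 and a strict comparison (`>`); this illustrates the idea and is not the Keras implementation itself.

```python
import numpy as np

def binary_accuracy(y_true, y_pred, threshold=0.5):
    """Fraction of predictions that match the binary labels.

    Predictions strictly above `threshold` are treated as 1, others as 0.
    """
    y_true = np.asarray(y_true, dtype=float)
    y_hat = (np.asarray(y_pred, dtype=float) > threshold).astype(float)
    return float(np.mean(y_true == y_hat))

# Two of the four predictions land on the correct side of the cutoff.
score = binary_accuracy([1, 1, 0, 0], [0.98, 0.4, 0.2, 0.6])
print(score)
```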

(Optional) Float representing the threshold for deciding whether prediction values are 1 or 0. Loss(tf.keras.losses.binary_crossentropy, weights). These are the loss. At the end of each epoch we calculate the mean of the dice scores, which represents the dice score for that particular epoch.
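The per-epoch averaging can be sketched with a small accumulator. The class name `EpochDiceTracker` and its methods are hypothetical, chosen for this example, and the batch scores are made-up values.

```python
class EpochDiceTracker:
    """Collects per-batch dice scores and reports their mean for the epoch."""

    def __init__(self):
        self.scores = []

    def update(self, batch_dice):
        # Called once per batch with that batch's dice score.
        self.scores.append(float(batch_dice))

    def result(self):
        # Mean over the batches seen so far; 0.0 if nothing was recorded.
        return sum(self.scores) / len(self.scores) if self.scores else 0.0

    def reset(self):
        # Called at the start of the next epoch.
        self.scores.clear()

tracker = EpochDiceTracker()
for batch_dice in (0.5, 0.7, 0.9):   # made-up batch scores
    tracker.update(batch_dice)
epoch_dice = tracker.result()
print(epoch_dice)
```

Note that a mean of batch scores is not the same as a single dice score computed over all pixels of the epoch; the two can differ when batches contain very different amounts of foreground.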

Lung segmentation was used to exclude extrathoracic detections. I submitted of the generated solutions and got the following leaderboard scores : Binary cross-entropy: 0. So again we see that focal loss and dice do a fair amount better than simple binary cross entropy.

This time the best result actually came from focal with γ=1.
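For readers unfamiliar with the γ parameter, here is a minimal NumPy sketch of a binary focal loss. It assumes the common form that scales each pixel's cross-entropy term by (1 - p_t)^γ and omits the optional class-balancing weight; it is not necessarily the exact variant used for the submissions above.

```python
import numpy as np

def binary_focal_loss(y_true, y_prob, gamma=1.0, eps=1e-7):
    """Mean binary focal loss without the optional alpha weighting.

    p_t is the probability the model assigns to the true class. The factor
    (1 - p_t) ** gamma shrinks the contribution of well-classified pixels.
    """
    y_true = np.asarray(y_true, dtype=float)
    p = np.clip(np.asarray(y_prob, dtype=float), eps, 1.0 - eps)
    p_t = np.where(y_true == 1, p, 1.0 - p)
    return float(np.mean(-((1.0 - p_t) ** gamma) * np.log(p_t)))

y_true = [1, 0, 1, 0]
y_prob = [0.9, 0.1, 0.6, 0.4]

bce = binary_focal_loss(y_true, y_prob, gamma=0.0)    # plain cross-entropy
focal = binary_focal_loss(y_true, y_prob, gamma=1.0)  # easy pixels down-weighted
print(bce, focal)
```

With γ=0 the scaling factor is 1 and the expression reduces to ordinary binary cross-entropy; larger values of γ concentrate the loss on the pixels the model still gets wrong.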
