This loss is useful when you have unbalanced classes within a sample, such as when segmenting each pixel of an image. For example, suppose you are trying to predict whether each pixel is cat, dog, or background.

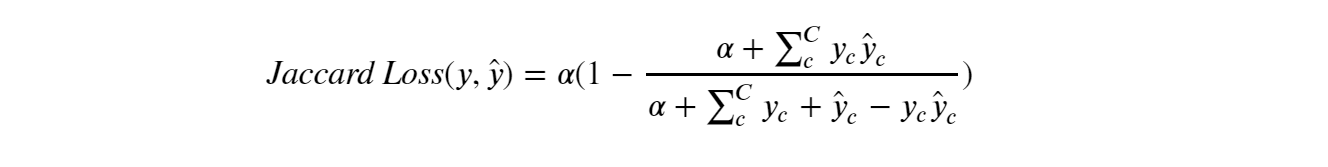

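You may have 80% background, 10% dog, and 10% cat pixels. Should a model that predicts 100% background be 80% right, or roughly 30%? Categorical cross-entropy treats it as 80% right (its pixel accuracy), whereas `jaccard_distance` is meant to treat it as roughly 30% right (the mean per-class Jaccard index is (0.8 + 0 + 0) / 3 ≈ 27%). Compared with the dice loss (both with smooth=100), the Jaccard distance gives a higher loss.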
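The arithmetic behind the cat/dog/background example can be checked with a short NumPy sketch. It assumes a toy 100-pixel image flattened to one dimension and hard (argmax) labels; `pixel_accuracy` and `mean_jaccard` are illustrative helper names, not functions from Keras or any other library.

```python
import numpy as np

# Toy "image" of 100 pixels, flattened: 0 = background, 1 = dog, 2 = cat.
y_true = np.array([0] * 80 + [1] * 10 + [2] * 10)
# A degenerate model that labels every pixel as background.
y_pred = np.zeros_like(y_true)

def pixel_accuracy(y_true, y_pred):
    """Fraction of pixels whose predicted label matches the ground truth."""
    return float(np.mean(y_true == y_pred))

def mean_jaccard(y_true, y_pred, num_classes):
    """Average over classes of |true AND pred| / |true OR pred| (hard per-class IoU)."""
    scores = []
    for c in range(num_classes):
        t = y_true == c
        p = y_pred == c
        union = np.logical_or(t, p).sum()
        inter = np.logical_and(t, p).sum()
        # Assumed convention: a class absent from both masks counts as a perfect match.
        scores.append(1.0 if union == 0 else inter / union)
    return float(np.mean(scores))

print(pixel_accuracy(y_true, y_pred))   # 0.8
print(mean_jaccard(y_true, y_pred, 3))  # 0.2666... (0.8 / 3)
```

Background scores 80 / 100 = 0.8, while dog and cat each score 0, so averaging over classes gives every class equal weight regardless of how many pixels it covers.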

Our experiments on one of the most … In general, computing the convex closure of set functions is NP-hard. A set function is a map $\{0,1\}^p \to \mathbb{R}$. The loss function I used is identical to this one.
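The hardness statement concerns arbitrary set functions. A well-known tractable special case is the submodular one: when a set function f on {0,1}^p is submodular and normalized so that f(∅) = 0, its convex closure equals the Lovász extension, which needs only one sort and p evaluations of f. A plain-Python sketch; the function name and the frozenset interface are assumptions for illustration:

```python
def lovasz_extension(f, x):
    """Evaluate the Lovász extension of a set function f at a point x in [0, 1]^p.

    f takes a frozenset of indices and is assumed to satisfy f(frozenset()) == 0.
    Coordinates are visited in decreasing order of x; each contributes x[i]
    times the marginal gain of adding index i to the set built so far.
    """
    order = sorted(range(len(x)), key=lambda i: x[i], reverse=True)
    chosen = set()
    previous = f(frozenset())
    value = 0.0
    for i in order:
        chosen.add(i)
        current = f(frozenset(chosen))
        value += x[i] * (current - previous)
        previous = current
    return value

# A concave function of the set size is submodular.
sqrt_size = lambda s: len(s) ** 0.5
print(lovasz_extension(sqrt_size, [1.0, 0.0, 1.0]))  # equals f({0, 2}) = sqrt(2), up to rounding
print(lovasz_extension(sqrt_size, [0.5, 0.5, 0.5]))  # 0.5 * sqrt(3), up to rounding
```

At a 0/1 vector the extension reproduces f on the corresponding set; between such vertices it interpolates piecewise-linearly, and for submodular f the result is convex.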

Custom weighted loss function in Keras. What is the purpose of the `add_loss` function in Keras? Overlap Coefficient is 1. Figure 2: Non-connected. Here is a dice loss for Keras which is smoothed to approximate a linear (L1) loss.
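The arithmetic of a smoothed soft Dice loss can be sketched in NumPy so it can be checked outside a training loop. This is not the code the text refers to: the squared terms in the denominator, the default `smooth=1.0` and the function names are assumptions, and inside a Keras model the same expression would be written with backend tensor ops instead of NumPy.

```python
import numpy as np

def dice_coef(y_true, y_pred, smooth=1.0):
    """Smoothed soft Dice coefficient computed along the last axis.

    Assumption: squared terms in the denominator, one common soft-Dice variant.
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    intersection = np.sum(np.abs(y_true * y_pred), axis=-1)
    denominator = np.sum(np.square(y_true), axis=-1) + np.sum(np.square(y_pred), axis=-1)
    # `smooth` keeps the ratio defined when both inputs are all zeros.
    return (2.0 * intersection + smooth) / (denominator + smooth)

def dice_loss(y_true, y_pred, smooth=1.0):
    """1 - Dice: 0 for an exact binary match, larger as the overlap shrinks."""
    return 1.0 - dice_coef(y_true, y_pred, smooth)

# Soft predictions for a 4-pixel binary mask.
print(dice_loss([1, 0, 1, 1], [0.9, 0.1, 0.8, 0.7]))
```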

Non-modular losses have been (implicitly) considered in the context of multilabel classification problems. I am trying to use Jaccard as a metric in my Keras LSTM. Adds Jaccard distance loss.
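For multilabel classification, a per-sample Jaccard score compares the set of true labels with the set of predicted labels. Because the denominator is the union of both sets, the score is not a sum of independent per-label terms, which is the sense in which such losses are non-modular. A plain-Python sketch averaged over samples, with a hypothetical function name:

```python
def jaccard_multilabel(y_true, y_pred):
    """Mean over samples of |true labels & predicted labels| / |true labels | predicted labels|.

    y_true and y_pred are sequences of 0/1 label-indicator rows of equal length.
    """
    scores = []
    for t, p in zip(y_true, y_pred):
        true_set = {i for i, v in enumerate(t) if v}
        pred_set = {i for i, v in enumerate(p) if v}
        union = true_set | pred_set
        # Assumed convention: two empty label sets count as a perfect match.
        scores.append(1.0 if not union else len(true_set & pred_set) / len(union))
    return sum(scores) / len(scores)

# Sample 1 scores 2/3, sample 2 scores 1, so the mean is 5/6.
print(jaccard_multilabel([[1, 0, 1], [0, 1, 0]], [[1, 1, 1], [0, 1, 0]]))
```

scikit-learn's `sklearn.metrics.jaccard_score(..., average="samples")` computes the same average; its treatment of empty label sets is governed by its `zero_division` parameter and may differ from the convention assumed here.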

Why do we want this? For this reason it (and the similar dice loss) has been used in Kaggle competitions with great … I modified this version to converge from greater than zero to zero because it looks nicer and matches other losses. With the `segmentation_models` package, the model and preprocessing helpers are imported as `from segmentation_models import Unet` and `from segmentation_models import get_preprocessing`.
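A smoothed Jaccard distance that decreases from above to zero can be sketched in NumPy. The formula (intersection plus `smooth` over union plus `smooth`), the rescaling by `smooth` and the default of 100 are assumptions chosen to match the behaviour described here; the loss reaches exactly zero when a binary mask is predicted exactly.

```python
import numpy as np

def jaccard_distance(y_true, y_pred, smooth=100.0):
    """Smoothed Jaccard distance along the last axis, rescaled by `smooth`.

    jac = (intersection + smooth) / (union + smooth), with
    union = sum(|y_true| + |y_pred|) - intersection; the loss is (1 - jac) * smooth.
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    intersection = np.sum(np.abs(y_true * y_pred), axis=-1)
    total = np.sum(np.abs(y_true) + np.abs(y_pred), axis=-1)
    jac = (intersection + smooth) / (total - intersection + smooth)
    # Shift so a perfect binary prediction gives 0 and worse predictions give positive values.
    return (1.0 - jac) * smooth

print(jaccard_distance([1, 0, 1, 1], [1, 0, 1, 1]))  # 0.0
print(jaccard_distance([1, 1, 0, 0], [0, 0, 1, 1]))  # no overlap: (1 - 100/104) * 100
```

A large `smooth` keeps the ratio close to 1 for small masks, so the gradient stays gentle instead of jumping as the overlap changes; rescaling by `smooth` brings the loss back to a usable magnitude.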

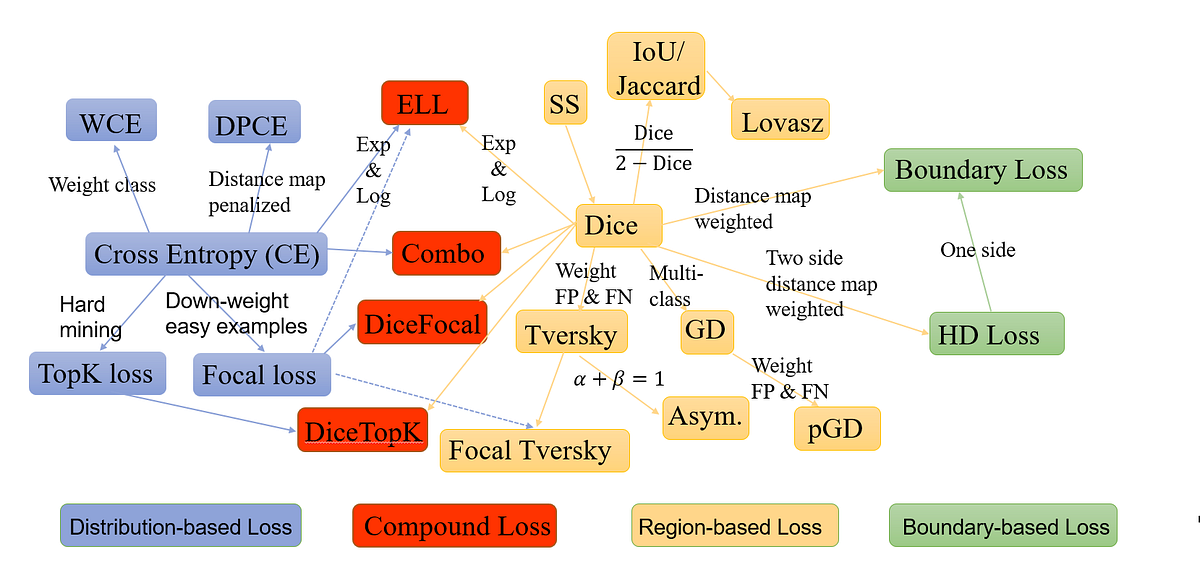

Despite the existence and great empirical success of metric-sensitive losses, i.e. … A loss function is one of the two arguments required for compiling a Keras model (the other is the optimizer), for example a `keras.Sequential()` model created after `from tensorflow import keras`. For wCE (weighted cross-entropy), theory suggests that no optimal approximation w.r.t. … *Tversky loss function for image segmentation using 3D fully convolutional deep networks*, International Workshop on Machine Learning in Medical Imaging.

If `normalize == True`, a zero-one (misclassification) loss returns the fraction of misclassifications (float); otherwise it returns the number of misclassifications (int). Defaults to `"mean"`. Keras: multiple outputs and multiple losses.
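scikit-learn exposes this behaviour as `sklearn.metrics.zero_one_loss(y_true, y_pred, normalize=True)`. The function below is a hypothetical standalone re-implementation of just the `normalize` switch, in plain Python, so the float-versus-int return is easy to see:

```python
def zero_one_loss(y_true, y_pred, normalize=True):
    """Misclassification loss: fraction (float) or count (int) of mismatched labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    errors = sum(1 for t, p in zip(y_true, y_pred) if t != p)
    # normalize=True -> fraction of errors; normalize=False -> raw error count.
    return errors / len(y_true) if normalize else errors

print(zero_one_loss([0, 1, 2, 2], [0, 2, 2, 1]))                   # 0.5
print(zero_one_loss([0, 1, 2, 2], [0, 2, 2, 1], normalize=False))  # 2
```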

Should you use the Jaccard index (soft IoU) instead of Dice, or not? An example embodiment may involve obtaining a training image and a corresponding ground truth mask that represents a desired segmentation of the training image. Metrics and loss functions.

These both measure how close the predicted mask is to the manually marked masks, ranging from 0 (no overlap) to 1 (complete congruence). This metric is closely related to the Dice coefficient, which is often used as a loss function during training. Convolutional neural networks trained for image segmentation tasks are usually optimized for (weighted) cross-entropy.
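Both overlap scores can be written in terms of the true positives (TP), false positives (FP) and false negatives (FN) of two binary masks. The Tversky index, TP / (TP + α·FP + β·FN), reduces to the Jaccard index for α = β = 1 and to the Dice coefficient for α = β = 0.5. The sketch below assumes masks given as 0/1 arrays of equal shape and assigns α to false positives and β to false negatives; the helper names are hypothetical.

```python
import numpy as np

def confusion_counts(y_true, y_pred):
    """True positives, false positives and false negatives of two binary masks."""
    t = np.asarray(y_true, dtype=bool)
    p = np.asarray(y_pred, dtype=bool)
    tp = int(np.sum(t & p))
    fp = int(np.sum(~t & p))
    fn = int(np.sum(t & ~p))
    return tp, fp, fn

def tversky_index(y_true, y_pred, alpha, beta):
    """TP / (TP + alpha*FP + beta*FN); returns 1.0 if the denominator is zero (assumed convention)."""
    tp, fp, fn = confusion_counts(y_true, y_pred)
    denominator = tp + alpha * fp + beta * fn
    return 1.0 if denominator == 0 else tp / denominator

def jaccard_index(y_true, y_pred):
    return tversky_index(y_true, y_pred, 1.0, 1.0)  # TP / (TP + FP + FN)

def dice_coefficient(y_true, y_pred):
    return tversky_index(y_true, y_pred, 0.5, 0.5)  # 2TP / (2TP + FP + FN)

truth = [1, 1, 1, 0, 0]
pred = [1, 1, 0, 1, 0]
print(confusion_counts(truth, pred))   # (2, 1, 1)
print(jaccard_index(truth, pred))      # 0.5
print(dice_coefficient(truth, pred))   # 0.666...
```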

This introduces an adverse discrepancy between the learning optimization objective (the loss) and the end target metric. Recent works in computer vision have … The Sørensen–Dice coefficient is a statistic used to gauge the similarity of two samples.
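For two finite sets $A$ and $B$ (for example, the pixels labelled foreground in the predicted and reference masks), the standard definitions together with the identity $|A \cup B| = |A| + |B| - |A \cap B|$ give a direct relation between the Jaccard index $J$ and the Sørensen–Dice coefficient $\mathrm{DSC}$, which also shows that $J$ never exceeds $\mathrm{DSC}$:

```latex
\[
J(A,B) = \frac{|A \cap B|}{|A \cup B|} = \frac{|A \cap B|}{|A| + |B| - |A \cap B|},
\qquad
\mathrm{DSC}(A,B) = \frac{2\,|A \cap B|}{|A| + |B|}
\]
\[
\mathrm{DSC} = \frac{2J}{1 + J}, \qquad
J = \frac{\mathrm{DSC}}{2 - \mathrm{DSC}}, \qquad
J \le \mathrm{DSC} \quad (\text{since } J \le 1)
\]
```

Both scores are 0 for disjoint sets and 1 for identical non-empty sets, and the map $J \mapsto 2J/(1+J)$ is increasing, so they rank predictions in the same order even though their values differ.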

The most popular similarity measures implemented in Python include Euclidean distance, Manhattan distance, Minkowski distance, cosine similarity and many more. Cosine similarity is a metric used to measure how similar two documents are irrespective of their size.
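Euclidean and Manhattan distance are both special cases of the Minkowski distance of order p: p = 1 gives Manhattan and p = 2 gives Euclidean. A plain-Python sketch with hypothetical helper names:

```python
def minkowski(u, v, p):
    """Minkowski distance (sum |u_i - v_i|^p)^(1/p); a metric for p >= 1."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    if p < 1:
        raise ValueError("p must be >= 1 for a metric")
    return sum(abs(a - b) ** p for a, b in zip(u, v)) ** (1.0 / p)

def manhattan(u, v):
    return minkowski(u, v, 1)  # sum of absolute coordinate differences

def euclidean(u, v):
    return minkowski(u, v, 2)  # straight-line distance

print(manhattan([0, 0], [3, 4]))  # 7.0
print(euclidean([0, 0], [3, 4]))  # 5.0
```

For real workloads, `scipy.spatial.distance` already provides `minkowski`, `cityblock` (Manhattan) and `euclidean`.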

Mathematically, it measures the cosine of the angle between two vectors projected in a multi-dimensional space.
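The cosine of that angle is the dot product divided by the product of the vector norms. A plain-Python sketch (the function name is hypothetical); scaling one vector by a positive constant, such as doubling every term count of a document, leaves the result unchanged, which is the sense in which it ignores document size:

```python
import math

def cosine_similarity(u, v):
    """cos(theta) = (u . v) / (|u| |v|); ranges from -1 to 1."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)

# Term-count vectors: the second "document" is the first one repeated twice.
doc_a = [1, 2, 0, 3]
doc_b = [2, 4, 0, 6]
print(cosine_similarity(doc_a, doc_b))  # 1.0 up to rounding
```

Note that `scipy.spatial.distance.cosine` returns the cosine *distance*, i.e. one minus this similarity.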
