Dice coefficient loss function in PyTorch. Is the dice loss commit merged yet?

Dice loss has not been merged yet, but you can use it as given above. I've tried the code and it works. However, for binary segmentation I seem to be getting better results with BCE. Make sure to…

Hi all, I am trying to implement dice loss for semantic segmentation using FCN_resnet101. For some reason, the dice loss is not changing and the model is not updated.

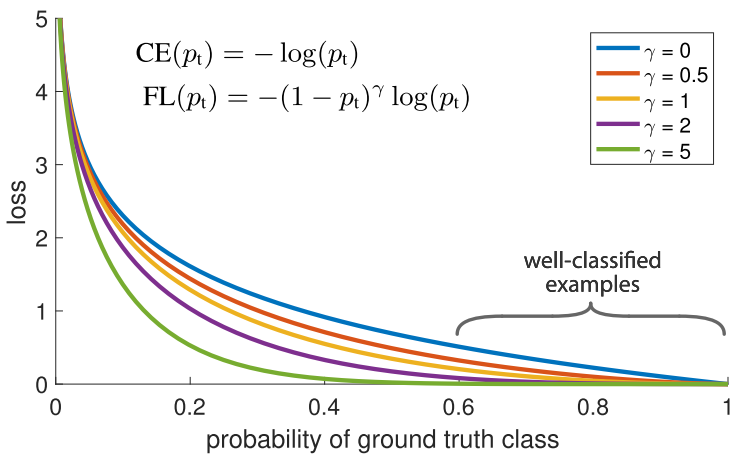

CE prioritizes overall pixel-wise accuracy, so some classes might suffer if they don’t have enough representation to influence CE. When you add the two losses together, you can adjust the weighting of the CE and Dice contributions until the result is acceptable. If you use only PyTorch methods, you won’t need to write the backward pass yourself in most cases.
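A minimal sketch of such a weighted combination is shown here. The function names, the `ce_weight`/`dice_weight` parameters, and the smoothing term `eps` are illustrative assumptions, not taken from any of the quoted posts. It assumes logits of shape `(N, C, H, W)` and integer class targets of shape `(N, H, W)`.

```python
import torch
import torch.nn.functional as F


def soft_dice_loss(logits, target, eps=1e-6):
    """Multi-class soft Dice loss (hypothetical helper).

    logits: (N, C, H, W) raw scores; target: (N, H, W) integer class ids.
    """
    num_classes = logits.shape[1]
    probs = F.softmax(logits, dim=1)
    # One-hot encode the target to shape (N, C, H, W).
    one_hot = F.one_hot(target, num_classes).permute(0, 3, 1, 2).float()
    dims = (0, 2, 3)  # sum over batch and spatial dims, keep the class dim
    intersection = (probs * one_hot).sum(dims)
    cardinality = probs.sum(dims) + one_hot.sum(dims)
    dice_per_class = (2 * intersection + eps) / (cardinality + eps)
    return 1 - dice_per_class.mean()


def ce_dice_loss(logits, target, ce_weight=0.5, dice_weight=0.5):
    """Weighted sum of cross-entropy and soft Dice (weights are assumptions)."""
    ce = F.cross_entropy(logits, target)
    dice = soft_dice_loss(logits, target)
    return ce_weight * ce + dice_weight * dice
```

Because everything is built from differentiable PyTorch operations, autograd provides the backward pass. The two weights are the knobs to tune; equal weights are only a starting point.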

To check whether the dice loss was working before, you could call backward on it and look at the gradients in your model. You could, however, write your own backward method, e.g. …

Dice-coefficient loss function vs cross-entropy.

Hi, I am trying to integrate dice loss with my U-Net model; the dice loss is borrowed from another task.
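The gradient check suggested above can be sketched as follows. The one-layer model, the random data, and the `dice_loss` helper are hypothetical stand-ins. The second half illustrates one common cause of a loss that never changes, namely thresholding the prediction before computing the loss; that is an assumption about what might be going wrong, not something the original posters confirmed.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in for a segmentation network: a single conv layer.
model = nn.Conv2d(3, 1, kernel_size=3, padding=1)
images = torch.randn(2, 3, 8, 8)
masks = (torch.rand(2, 1, 8, 8) > 0.5).float()


def dice_loss(pred, target, eps=1e-6):
    inter = (pred * target).sum()
    return 1 - (2 * inter + eps) / (pred.sum() + target.sum() + eps)


# Differentiable path: sigmoid probabilities go straight into the loss.
loss = dice_loss(torch.sigmoid(model(images)), masks)
loss.backward()
grad_norms = {name: p.grad.norm().item() for name, p in model.named_parameters()}

# Non-differentiable path: the comparison cuts the autograd graph, so the
# resulting loss no longer depends on the model parameters.
hard_pred = (torch.sigmoid(model(images)) > 0.5).float()
hard_loss = dice_loss(hard_pred, masks)
hard_requires_grad = hard_loss.requires_grad
```

If `grad_norms` contains only zeros, or the loss tensor reports `requires_grad == False`, the optimizer has nothing to update, which matches the "loss is not changing" symptom.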

Loss not decreasing - Pytorch. I am using dice loss for my implementation of a Fully Convolutional Network (FCN) which involves hypernetworks.

The model has two inputs. Maybe this will be useful in my future work. To tackle the problem of class imbalance, we use the Soft Dice Score instead of pixel-wise cross-entropy loss. At the end of each epoch we calculate the mean of the dice scores, which represents the dice score for that epoch.
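A minimal sketch of a soft Dice score and its per-epoch mean, assuming binary masks and logits of shape `(N, 1, H, W)`. The function names and the smoothing term `eps` are illustrative assumptions rather than the code the post refers to.

```python
import torch


def soft_dice_score(logits, target, eps=1e-6):
    """Soft Dice score per sample for binary masks.

    logits: (N, 1, H, W) raw scores; target: same shape with values in {0, 1}.
    Returns a tensor of shape (N,).
    """
    probs = torch.sigmoid(logits).flatten(1)
    target = target.float().flatten(1)
    intersection = (probs * target).sum(dim=1)
    denominator = probs.sum(dim=1) + target.sum(dim=1)
    return (2 * intersection + eps) / (denominator + eps)


def epoch_mean_dice(batches):
    """Average the per-sample Dice scores over all (logits, target) batches."""
    scores = []
    with torch.no_grad():
        for logits, target in batches:
            scores.append(soft_dice_score(logits, target))
    return torch.cat(scores).mean().item()
```

For training, the corresponding loss would be `1 - soft_dice_score(logits, target).mean()`, computed outside `torch.no_grad()` so that gradients flow.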

Check out this post for a plain Python implementation of loss functions in PyTorch.

Install PyTorch3D (following the instructions here). Try a few 3D operators, e.g. …

It measures the loss given inputs x1, x2 and a label tensor y containing values 1 or -1. It is used for measuring whether two inputs are similar or dissimilar.

Here are a few examples of custom loss functions that I came across in this Kaggle Notebook.

It provides an implementation of the following custom loss functions in PyTorch as well as TensorFlow. I hope this will be helpful for anyone looking to see how to make your own custom loss functions.

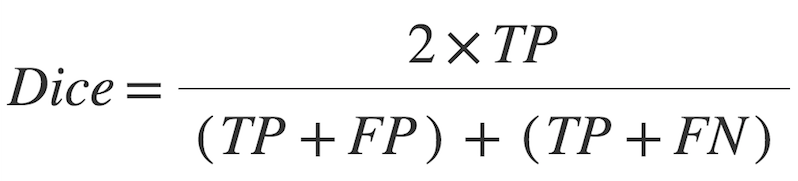
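For reference, a common way to write the soft Dice coefficient and the loss derived from it is given here. The symbols are assumptions of this sketch and may differ from the figure: $p_i$ is the predicted probability and $g_i \in \{0, 1\}$ the ground-truth label at pixel $i$.

$$
\text{Dice}(p, g) = \frac{2 \sum_i p_i \, g_i}{\sum_i p_i + \sum_i g_i},
\qquad
\mathcal{L}_{\text{Dice}} = 1 - \text{Dice}(p, g)
$$

Some implementations square the terms in the denominator or add a small smoothing constant to both numerator and denominator to avoid division by zero on empty masks.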

With respect to the neural network output, the numerator is concerned with the common activations between our prediction and target mask, whereas the denominator is concerned with the quantity of activations in each mask separately.

PyTorch implementation of 3D U-Net and its variants: Standard 3D U-Net, based on "3D U-Net: Learning Dense Volumetric Segmentation from Sparse Annotation" (Özgün Çiçek et al.).

Residual 3D U-Net, based on "Superhuman Accuracy on the SNEMI3D Connectomics Challenge" (Kisuk Lee et al.).

MSE, written like this:

```
import torch.nn as nn
criterion = nn.MSELoss(reduction="sum")  # replaces the deprecated size_average=False
```
