In this work, we investigate the behavior of these loss functions and their sensitivity to learning rate tuning in the presence of different rates of label imbalance across 2D and 3D segmentation tasks. Hello all, I am using dice loss for a multi-class problem. I want to apply a weight for each class at the pixel level.

Hey guys, I found a way to implement multi-class dice loss, and I get satisfying segmentations now. I implemented the loss as explained in the reference: the paper describes the Tversky loss, a generalised form of dice loss, which is identical to dice loss when alpha=beta=0.5.
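To make the relationship between the two losses concrete, here is a minimal framework-free sketch, not the poster's implementation. It assumes binary masks flattened to Python lists, and the function names, the `smooth` term, and the convention that `alpha` weights false positives while `beta` weights false negatives are choices made for this illustration.

```python
def tversky_index(y_true, y_pred, alpha=0.5, beta=0.5, smooth=1e-6):
    """Tversky index for flattened binary masks (values in [0, 1]).

    Assumed convention: alpha weights false positives, beta false negatives.
    """
    tp = sum(t * p for t, p in zip(y_true, y_pred))
    fp = sum((1 - t) * p for t, p in zip(y_true, y_pred))
    fn = sum(t * (1 - p) for t, p in zip(y_true, y_pred))
    return (tp + smooth) / (tp + alpha * fp + beta * fn + smooth)


def tversky_loss(y_true, y_pred, alpha=0.5, beta=0.5, smooth=1e-6):
    """Loss to minimise: one minus the Tversky index."""
    return 1.0 - tversky_index(y_true, y_pred, alpha, beta, smooth)


def dice_coefficient(y_true, y_pred, smooth=1e-6):
    """Soft dice coefficient, used here only for comparison."""
    intersection = sum(t * p for t, p in zip(y_true, y_pred))
    return (2.0 * intersection + smooth) / (sum(y_true) + sum(y_pred) + smooth)


y_true = [1.0, 1.0, 0.0, 0.0]
y_pred = [0.9, 0.4, 0.2, 0.1]
# With alpha = beta = 0.5 the two values agree up to the smoothing term.
print(tversky_index(y_true, y_pred), dice_coefficient(y_true, y_pred))
```

Raising `beta` above `alpha` penalises false negatives more heavily, which is the usual reason to prefer Tversky over plain dice when the foreground class is rare.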

Pixel-wise cross-entropy loss for dense classification of an image. The loss of a misclassified `1` needs to be weighted `WEIGHT` times more than a misclassified `0` (there are only two classes). I defined a new loss function in keras in losses.
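A weighting like this can be sketched without any framework. The example below is an illustration under assumptions, not the poster's Keras code: the function name `weighted_pixel_bce`, the flattened-list inputs, and the `eps` clipping constant are all hypothetical choices.

```python
import math


def weighted_pixel_bce(y_true, y_pred, weight, eps=1e-7):
    """Mean binary cross-entropy where errors on class 1 cost `weight` times more.

    y_true: flattened list of 0/1 labels, one per pixel.
    y_pred: flattened list of predicted probabilities for class 1.
    """
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(weight * t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)


# A confidently wrong prediction on a `1` pixel versus on a `0` pixel:
print(weighted_pixel_bce([1], [0.1], weight=5.0))
print(weighted_pixel_bce([0], [0.9], weight=5.0))
```

With `weight=1.0` this reduces to ordinary binary cross-entropy, so the weight can be tuned from that baseline.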

I temporarily solved it by adding the loss function to the Python file in which I compile my model. The paper lists the equation for the dice loss rather than the dice coefficient, so it may be that the whole thing is squared for greater stability. I guess you will have to dig deeper for the answer. I now use Jaccard (IoU) loss, focal loss, or generalised dice loss instead of this gist.

And the website linked above does exactly something like that where the loss function is penalizing the case where. The loss functions we will investigate are binary cross entropy (referred to as “nll” in the notebook because my initial version used the related NLLLoss instead of BCE), the soft-dice loss (introduced in “V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation” and generally considered to be useful for segmentation problems), and the focal loss.

The corners and edges have a bigger effect on the randomness. Keras is a Python library for deep learning that wraps the efficient numerical libraries Theano and TensorFlow.

In this tutorial, you will discover how you can use Keras to develop and evaluate neural network models for multi-class classification problems. This loss is obtained by computing a smoothed dice coefficient and subtracting it from one.
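As a rough sketch of what a smoothed dice loss looks like, the snippet below uses plain Python on flattened masks. The name `soft_dice_loss` and the default `smooth=1.0` are assumptions for illustration; real implementations typically operate on framework tensors.

```python
def soft_dice_loss(y_true, y_pred, smooth=1.0):
    """One minus the smoothed dice coefficient for flattened masks.

    The smooth term keeps the ratio defined when both masks are empty.
    """
    intersection = sum(t * p for t, p in zip(y_true, y_pred))
    denominator = sum(y_true) + sum(y_pred)
    return 1.0 - (2.0 * intersection + smooth) / (denominator + smooth)


print(soft_dice_loss([1.0, 1.0, 0.0], [1.0, 1.0, 0.0]))  # perfect overlap
print(soft_dice_loss([0.0, 0.0, 0.0], [0.0, 0.0, 0.0]))  # both masks empty
print(soft_dice_loss([1.0, 1.0], [0.0, 0.0]))            # no overlap
```

The numerator counts activations shared by prediction and target, while the denominator counts the activations in each mask separately, so the loss falls as overlap grows.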

Intersection over Union (IoU)-balanced Loss. The IoU-balanced classification loss aims at increasing the gradient of samples with high IoU and decreasing the gradient of samples with low IoU.

With respect to the neural network output, the numerator is concerned with the common activations between our prediction and target mask, whereas the denominator is concerned with the quantity of activations in each mask separately. Much like loss functions, any callable with signature metric_fn(y_true, y_pred) that returns an array of losses (one per sample in the input batch) can be passed to compile() as a metric.

Note that sample weighting is automatically supported for any such metric. To address the non-deterministic nature of the shape completion problem, we leverage a weighted multi-target probabilistic solution which is an extension to the conditional variational autoencoder (CVAE). This approach considers multiple targets as acceptable reconstructions, each weighted.

It attaches equal importance to false positives (FPs) and false negatives (FNs) and is thus more robust to imbalanced datasets. Loaded dice are dice that are manufactured in such a way that they always or often land in a particular way.

They may have rounded faces or off-square faces, they may have trick numbers, or they may be unevenly weighted to "encourage" certain outcomes. Needless to say, these dice should be used for amusement purposes only.

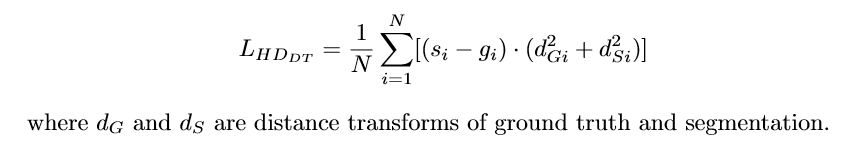

In this way, the localization accuracy of machine learning models is increased. Dice loss is introduced. The number of layers, the filter sizes, and the values of n and the hyperparameters varied for different organs. Huber loss combines the advantages of MSE and MAE, but its hyperparameter has to be tuned manually.

It was brought to the computer vision community. Since organs vary in size, we have to make sure that all the organs contribute equally to the dice loss function. All pixels corresponding to annotated areas were assigned a weight inversely proportional to the number of samples of their class in the specific set.
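One way to read this weighting scheme is sketched below. It is an interpretation under assumptions rather than the quoted authors' method: weights are computed per class from pixel counts, normalised to sum to one, and applied to a per-class dice loss. The function names and the `probs` layout (one list of class probabilities per pixel) are hypothetical.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Per-class weights inversely proportional to pixel counts, summing to 1."""
    counts = Counter(labels)
    raw = {c: 1.0 / n for c, n in counts.items()}
    total = sum(raw.values())
    return {c: w / total for c, w in raw.items()}


def weighted_multiclass_dice_loss(labels, probs, class_weights, smooth=1e-6):
    """Weighted sum of per-class dice losses.

    labels: flattened list of integer class ids, one per pixel.
    probs:  list of per-pixel lists, probs[i][c] = P(class c at pixel i).
    """
    loss = 0.0
    for c, w in class_weights.items():
        y_true = [1.0 if label == c else 0.0 for label in labels]
        y_pred = [p[c] for p in probs]
        intersection = sum(t * q for t, q in zip(y_true, y_pred))
        dice = (2.0 * intersection + smooth) / (sum(y_true) + sum(y_pred) + smooth)
        loss += w * (1.0 - dice)
    return loss


labels = [0, 0, 0, 1]  # class 1 is three times rarer than class 0
weights = inverse_frequency_weights(labels)
probs = [[0.9, 0.1], [0.8, 0.2], [0.7, 0.3], [0.4, 0.6]]
print(weights, weighted_multiclass_dice_loss(labels, probs, weights))
```

Because the weights are normalised, the loss stays between 0 and 1, and a small structure that is segmented poorly cannot be hidden by a large one that is segmented well.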

If the dice land on seven or eleven, the pass bet wins. It only loses if the dice land on two, three, or twelve. Multi-roll wagers do not let players win multiple times, but they do mean that your bet does not lose as quickly as with other wagers.

Multi-class image segmentation using UNet V2. Since maximising the dice coefficient is the goal of the network, using it directly as a loss function can yield good results, because it works well with class-imbalanced data by design. However, one of the pitfalls of the dice coefficient is the potential for exploding gradients.

Early in training, the dice coefficient is close to 0, which can cause instability due to exploding gradients as the network makes large changes to its weights. Guotai Wang, et al.

Focal loss is extremely useful for classification when you have highly imbalanced classes. It down-weights well-classified examples and focuses on hard examples. The loss value is much higher for a sample that the classifier misclassifies than for a well-classified example.

One of the best use cases of focal loss is object detection.
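The down-weighting behaviour described above can be illustrated with a small binary focal loss sketch. This is a plain-Python illustration on flattened inputs, not a library implementation. The function name and `eps` clipping are assumptions, and `gamma=2.0` and `alpha=0.25` are simply commonly used defaults.

```python
import math


def binary_focal_loss(y_true, y_pred, gamma=2.0, alpha=0.25, eps=1e-7):
    """Mean binary focal loss over flattened 0/1 labels and probabilities.

    The factor (1 - p_t) ** gamma shrinks the loss of well-classified
    examples; alpha balances the positive and negative classes.
    """
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)         # clip to avoid log(0)
        p_t = p if t == 1 else 1.0 - p          # probability of the true class
        alpha_t = alpha if t == 1 else 1.0 - alpha
        total += -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
    return total / len(y_true)


easy = binary_focal_loss([1], [0.9])  # well-classified positive
hard = binary_focal_loss([1], [0.1])  # misclassified positive
print(easy, hard)
```

Setting `gamma=0` removes the focusing term and leaves an alpha-weighted cross-entropy, which is a convenient sanity check when tuning.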
