Here is a dice loss for Keras which is smoothed to approximate a linear (L1) loss. All losses are also provided as function handles (e.g. keras.losses.sparse_categorical_crossentropy). Using loss classes instead lets you pass configuration arguments at instantiation time. With your code, a correct prediction gets -… and a wrong one gets -0.…, which I think is the opposite of what a loss function should be.
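A minimal sketch of a smoothed soft dice loss in TensorFlow/Keras, assuming binary masks and sigmoid outputs in [0, 1]. The names dice_coef and dice_loss and the default smooth=1.0 are our own choices, not taken from the code under discussion. Returning 1 - dice rather than -dice keeps the loss non-negative with 0 as the optimum; the two differ only by a constant, so their gradients are identical.

```python
import tensorflow as tf

def dice_coef(y_true, y_pred, smooth=1.0):
    """Soft dice coefficient computed over all elements of the batch.

    `smooth` is added to numerator and denominator so the ratio stays
    finite (and equals 1) when both masks are empty.
    """
    y_true = tf.cast(y_true, y_pred.dtype)
    # Flatten everything so one coefficient is computed for the whole batch.
    y_true_f = tf.reshape(y_true, [-1])
    y_pred_f = tf.reshape(y_pred, [-1])
    intersection = tf.reduce_sum(y_true_f * y_pred_f)
    denom = tf.reduce_sum(y_true_f) + tf.reduce_sum(y_pred_f)
    return (2.0 * intersection + smooth) / (denom + smooth)

def dice_loss(y_true, y_pred):
    # 0 for perfect overlap, approaching 1 for no overlap,
    # so minimizing the loss maximizes the overlap.
    return 1.0 - dice_coef(y_true, y_pred)
```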

How can I fix my dice loss calculation? The dice loss becomes NaN after some epochs. I wrote a custom loss function in Keras; before trying dice, I was using sparse categorical cross-entropy.
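Sparse categorical cross-entropy takes integer labels, so a dice loss used in its place has to one-hot encode them first. A sketch under that assumption (y_true is an integer class map, y_pred holds softmax probabilities with the class axis last; the name sparse_dice_loss is hypothetical):

```python
import tensorflow as tf

def sparse_dice_loss(y_true, y_pred, smooth=1.0):
    """Multi-class soft dice loss for integer labels.

    y_true: integer class map, shape (batch, H, W) or (batch, H, W, 1).
    y_pred: softmax probabilities, shape (batch, H, W, C).
    """
    num_classes = y_pred.shape[-1]
    y_true = tf.cast(y_true, tf.int32)
    # Drop a trailing channel axis of size 1 if present.
    if y_true.shape.rank == y_pred.shape.rank:
        y_true = tf.squeeze(y_true, axis=-1)
    y_true_oh = tf.one_hot(y_true, depth=num_classes, dtype=y_pred.dtype)
    # Sum over batch and spatial axes, keep one value per class.
    axes = list(range(y_pred.shape.rank - 1))
    intersection = tf.reduce_sum(y_true_oh * y_pred, axis=axes)
    denom = tf.reduce_sum(y_true_oh, axis=axes) + tf.reduce_sum(y_pred, axis=axes)
    dice_per_class = (2.0 * intersection + smooth) / (denom + smooth)
    # Average the per-class dice, then turn it into a loss.
    return 1.0 - tf.reduce_mean(dice_per_class)
```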

The dice_coef_intersection, dice_coef_union and dice_coef_dice values of the first batch are correct, but after that they all go wrong. From the losses, though, I can see that the dice loss is larger than the surface loss (~-…) when the model converges, and the surface loss tends to be minimized toward 0.

BinaryCrossentropy: Computes the cross-entropy loss between true labels and predicted labels.
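The surface (boundary) loss weights each predicted probability by a signed distance to the ground-truth boundary, so unlike dice its values can be negative. A sketch, assuming the distance map is precomputed with SciPy's distance_transform_edt (negative inside the object, positive outside); the helper names and the convention for empty or full masks are hypothetical.

```python
import numpy as np
import tensorflow as tf
from scipy.ndimage import distance_transform_edt

def signed_distance_map(mask):
    """Signed Euclidean distance to the mask boundary:
    negative inside the foreground, positive outside."""
    fg = np.asarray(mask).astype(bool)
    if not fg.any() or fg.all():
        # Hypothetical convention: no boundary, so the map is all zeros.
        return np.zeros(fg.shape, dtype=np.float32)
    outside = distance_transform_edt(~fg)  # background: distance to nearest foreground
    inside = distance_transform_edt(fg)    # foreground: distance to nearest background
    return (outside - inside).astype(np.float32)

def surface_loss(dist_map, y_pred):
    """Mean of predicted probabilities weighted by the signed distance map."""
    dist = tf.cast(dist_map, tf.float32)
    probs = tf.cast(y_pred, tf.float32)
    return tf.reduce_mean(dist * probs)
```

Because Keras passes only (y_true, y_pred) to a loss, the distance map has to reach the loss some other way, e.g. precomputed and stacked into y_true; how it is then combined with dice (for instance a weighted sum) is a design choice.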

Generalized dice loss for multi-class segmentation: a Keras implementation. Error: "Could not interpret loss function identifier". We use a ModelCheckpoint callback to save the weights only when the monitored quantity improves, as judged by its mode parameter. MeanSquaredError: Computes the mean of squares of errors between labels and predictions.
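A sketch of the generalized dice loss of Sudre et al. (2017), in which each class is weighted by the inverse square of its reference volume so that small classes count as much as large ones. It assumes one-hot y_true and softmax y_pred with the class axis last; giving classes absent from the batch a weight of 0 (via tf.math.divide_no_nan) is our own choice.

```python
import tensorflow as tf

def generalized_dice_loss(y_true, y_pred, eps=1e-6):
    """Generalized dice loss for one-hot y_true and softmax y_pred,
    both shaped (batch, ..., C)."""
    y_true = tf.cast(y_true, y_pred.dtype)
    axes = list(range(y_pred.shape.rank - 1))  # every axis except the class axis
    ref_vol = tf.reduce_sum(y_true, axis=axes)  # per-class reference volume
    # w_l = 1 / (sum of reference pixels)^2; absent classes get weight 0.
    weights = tf.math.divide_no_nan(tf.ones_like(ref_vol), tf.square(ref_vol))
    intersection = tf.reduce_sum(y_true * y_pred, axis=axes)
    union = tf.reduce_sum(y_true + y_pred, axis=axes)
    numerator = 2.0 * tf.reduce_sum(weights * intersection)
    denominator = tf.reduce_sum(weights * union)
    return 1.0 - numerator / (denominator + eps)
```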

The issue is that the loss becomes NaN after some epochs. I am doing 5-fold cross-validation and checking the validation and training losses for each fold. For some folds the loss quickly becomes NaN; for others it takes a while to reach NaN.
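One common cause of a NaN dice loss is 0/0, when a batch contains no foreground and the prediction is all zeros. A sketch (function names hypothetical) contrasting an unsmoothed dice with a smoothed one, plus Keras's TerminateOnNaN callback, which stops training at the first NaN loss. This does not address other causes, such as a learning rate that is too high.

```python
import tensorflow as tf

def naive_dice_loss(y_true, y_pred):
    # No smoothing: 0 / 0 = NaN when both masks are empty.
    inter = tf.reduce_sum(y_true * y_pred)
    return 1.0 - 2.0 * inter / (tf.reduce_sum(y_true) + tf.reduce_sum(y_pred))

def safe_dice_loss(y_true, y_pred, smooth=1.0, eps=1e-7):
    y_true = tf.cast(y_true, y_pred.dtype)
    # Guard against predictions outside [0, 1] (e.g. a missing sigmoid).
    y_pred = tf.clip_by_value(y_pred, 0.0, 1.0)
    inter = tf.reduce_sum(y_true * y_pred)
    denom = tf.reduce_sum(y_true) + tf.reduce_sum(y_pred)
    # eps keeps the denominator positive even if smooth is set to 0.
    return 1.0 - (2.0 * inter + smooth) / (denom + smooth + eps)

# Stop training as soon as a NaN loss appears instead of running every epoch.
nan_guard = tf.keras.callbacks.TerminateOnNaN()
```

Usage: model.fit(x, y, callbacks=[nan_guard], ...).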

I have inserted a constant into the loss function. Now for the trickiest part: Keras loss functions must take only (y_true, y_pred) as parameters.

So we need a separate function that returns another function.
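A minimal sketch of that pattern, assuming a dice loss whose extra parameter is a smoothing constant (make_dice_loss and smooth are hypothetical names): the outer function receives the configuration, and the inner function it returns has exactly the (y_true, y_pred) signature Keras expects.

```python
import tensorflow as tf

def make_dice_loss(smooth=1.0):
    """Factory: returns loss(y_true, y_pred) with `smooth` fixed at call time."""
    def dice_loss(y_true, y_pred):
        y_true = tf.cast(y_true, y_pred.dtype)
        inter = tf.reduce_sum(y_true * y_pred)
        denom = tf.reduce_sum(y_true) + tf.reduce_sum(y_pred)
        # `smooth` is read from the enclosing scope (a closure).
        return 1.0 - (2.0 * inter + smooth) / (denom + smooth)
    return dice_loss

# Usage: model.compile(optimizer="adam", loss=make_dice_loss(smooth=10.0))
```

A model compiled with such a closure has to be reloaded with custom_objects in tf.keras.models.load_model (or with compile=False), because Keras cannot rebuild the inner function by name.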

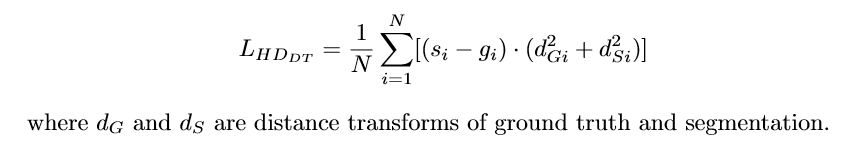

In this work, we investigate the behavior of these loss functions and their sensitivity to learning rate tuning in the presence of different rates of label imbalance across 2D and 3D segmentation tasks. With respect to the neural network output, the numerator is concerned with the common activations between our prediction and the target mask, whereas the denominator is concerned with the quantity of activations in each mask separately.
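In symbols (our notation: p_i is the predicted probability and g_i the target value at pixel i), the soft dice coefficient and the corresponding loss are

$$
D = \frac{2\sum_i p_i g_i}{\sum_i p_i + \sum_i g_i}, \qquad \mathcal{L}_{\text{dice}} = 1 - D .
$$

For example, with p = (1, 0.5, 0) and g = (1, 1, 0), the numerator is 2(1 + 0.5) = 3 and the denominator is 1.5 + 2 = 3.5, so D = 6/7 ≈ 0.857 and the loss is about 0.143.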

It also yielded a more stable learning process. Our code is publicly available. Keywords: boundary loss, unbalanced data, semantic segmentation, deep learning, CNN.

Yes, it is possible to build a custom loss function in Keras by adding new layers to the model and compiling it with a loss chosen for the dataset (e.g. loss = binary_crossentropy if the dataset has two target values, such as yes or no). Create a Sequential model by passing a list of layer instances to the constructor.
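A small sketch of building a Sequential model from a list of layer instances and compiling it with a custom loss passed as a function object. The layer sizes, the random toy data and the dice_loss helper are illustrative assumptions.

```python
import numpy as np
import tensorflow as tf

def dice_loss(y_true, y_pred, smooth=1.0):
    """Soft dice loss computed over the whole batch."""
    y_true = tf.cast(y_true, y_pred.dtype)
    inter = tf.reduce_sum(y_true * y_pred)
    denom = tf.reduce_sum(y_true) + tf.reduce_sum(y_pred)
    return 1.0 - (2.0 * inter + smooth) / (denom + smooth)

# A Sequential model built from a list of layer instances.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(8,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),  # probabilities in [0, 1]
])

# Built-in losses can be passed by name, e.g. loss="binary_crossentropy";
# a custom loss is passed as the function object itself.
model.compile(optimizer="adam", loss=dice_loss)

# Toy random data, only to show that training runs end to end.
rng = np.random.default_rng(0)
x = rng.random((16, 8)).astype("float32")
y = (rng.random((16, 1)) > 0.5).astype("float32")
history = model.fit(x, y, epochs=2, batch_size=8, verbose=0)
```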

If True, the loss is calculated for each image in the batch and then averaged over the whole batch. A small smoothing value is added to avoid division by zero. Returns: a callable dice_loss instance, which can be passed to the model (e.g. in model.compile).
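These parameter descriptions fit a configurable loss class. A minimal sketch of one, subclassing tf.keras.losses.Loss; the class name DiceLoss and the parameter names per_image and smooth are our assumptions, since the text does not name them.

```python
import tensorflow as tf

class DiceLoss(tf.keras.losses.Loss):
    """Soft dice loss as a configurable Keras loss class (hypothetical sketch).

    per_image: if True, compute dice per image, then average over the batch;
               otherwise compute a single dice over the whole batch.
    smooth:    small value to avoid division by zero.
    """

    def __init__(self, per_image=False, smooth=1e-5, name="dice_loss"):
        super().__init__(name=name)
        self.per_image = per_image
        self.smooth = smooth

    def call(self, y_true, y_pred):
        y_true = tf.cast(y_true, y_pred.dtype)
        # One row per image, or a single row for the whole batch.
        rows = tf.shape(y_pred)[0] if self.per_image else 1
        y_true = tf.reshape(y_true, [rows, -1])
        y_pred = tf.reshape(y_pred, [rows, -1])
        inter = tf.reduce_sum(y_true * y_pred, axis=1)
        denom = tf.reduce_sum(y_true, axis=1) + tf.reduce_sum(y_pred, axis=1)
        dice = (2.0 * inter + self.smooth) / (denom + self.smooth)
        # The base class averages this per-row vector (default reduction).
        return 1.0 - dice

    def get_config(self):
        config = super().get_config()
        config.update(per_image=self.per_image, smooth=self.smooth)
        return config
```

Usage: model.compile(optimizer="adam", loss=DiceLoss(per_image=True)). With per_image=True, an empty image in the batch counts as much as a large object, which changes the result noticeably on sparse masks.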

The loss value is much higher for a sample that the classifier misclassifies than for a well-classified example. One of the best use cases of focal loss is object detection, where the imbalance between the background class and the other classes is extremely high. The inverse labels could be used to the same effect.

Hi everyone, I have recently been working on segmentation of medical images.
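A sketch of the binary focal loss of Lin et al. (2017), FL = -α_t (1 - p_t)^γ log(p_t), written as a closure so that γ and α can be configured. The defaults γ = 2 and α = 0.25 are the values commonly quoted from that paper; the function name is hypothetical, and recent TensorFlow versions also ship tf.keras.losses.BinaryFocalCrossentropy.

```python
import tensorflow as tf

def binary_focal_loss(gamma=2.0, alpha=0.25, eps=1e-7):
    """Return a Keras-style loss(y_true, y_pred) computing the binary focal loss."""
    def loss(y_true, y_pred):
        y_true = tf.cast(y_true, y_pred.dtype)
        # Keep log() finite.
        y_pred = tf.clip_by_value(y_pred, eps, 1.0 - eps)
        # p_t: predicted probability assigned to the true class.
        p_t = y_true * y_pred + (1.0 - y_true) * (1.0 - y_pred)
        # alpha for positives, 1 - alpha for negatives.
        alpha_t = y_true * alpha + (1.0 - y_true) * (1.0 - alpha)
        # (1 - p_t)^gamma shrinks the loss of well-classified examples.
        return tf.reduce_mean(-alpha_t * tf.pow(1.0 - p_t, gamma) * tf.math.log(p_t))
    return loss
```

With γ = 0 and α = 0.5 this reduces to half the ordinary binary cross-entropy, which is a quick way to check an implementation.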

When building a neural network, which should be chosen as the loss function: pixel-wise softmax cross-entropy or the dice coefficient?

Implementing complex (multi-argument) loss functions cleanly in Keras: Foreword.
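The two are not mutually exclusive: one option is to sum them. A sketch, assuming one-hot labels and softmax outputs of shape (batch, H, W, C); the name ce_dice_loss and the dice_weight knob are hypothetical.

```python
import tensorflow as tf

def ce_dice_loss(y_true, y_pred, dice_weight=1.0, smooth=1.0):
    """Pixel-wise softmax cross-entropy plus (1 - mean per-class soft dice).

    y_true: one-hot labels, y_pred: softmax probabilities,
    both shaped (batch, H, W, C).
    """
    y_true = tf.cast(y_true, y_pred.dtype)
    # Cross-entropy per pixel, averaged over batch and pixels.
    ce = tf.reduce_mean(tf.keras.losses.categorical_crossentropy(y_true, y_pred))
    # Soft dice per class, summed over batch and spatial axes.
    axes = [0, 1, 2]
    inter = tf.reduce_sum(y_true * y_pred, axis=axes)
    denom = tf.reduce_sum(y_true + y_pred, axis=axes)
    dice = tf.reduce_mean((2.0 * inter + smooth) / (denom + smooth))
    return ce + dice_weight * (1.0 - dice)
```

Cross-entropy gives well-behaved per-pixel gradients, while the dice term counteracts class imbalance; dice_weight controls the balance between them.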
