All losses are available both as classes and as function handles (e.g. keras.losses.sparse_categorical_crossentropy). Using classes enables you to pass configuration arguments at instantiation time. For the cosine similarity loss, for example, one such argument is the dimension along which the cosine similarity is computed.
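The difference between the two forms can be sketched as follows. This is a minimal illustration rather than an excerpt from the Keras documentation: the toy labels and logits are made up, and `from_logits=True` and `axis=-1` are simply the configuration values chosen for the example.

```python
import numpy as np
from tensorflow import keras

# Toy data (made up for illustration): 2 samples, 3 classes.
y_true = np.array([0, 2])
logits = np.array([[2.0, 0.5, 0.1],
                   [0.2, 0.3, 1.5]], dtype="float32")

# Function handle: configuration is passed on every call, and the
# result holds one loss value per sample.
per_sample = keras.losses.sparse_categorical_crossentropy(
    y_true, logits, from_logits=True)

# Class: configuration is fixed once at instantiation time. With the
# default reduction, calling the instance returns a single scalar.
loss_fn = keras.losses.SparseCategoricalCrossentropy(from_logits=True)
scalar = loss_fn(y_true, logits)

# The cosine similarity loss takes the dimension to compute along as `axis`.
cosine_fn = keras.losses.CosineSimilarity(axis=-1)
vec = np.array([[1.0, 2.0]], dtype="float32")
cos_loss = cosine_fn(vec, vec)  # identical vectors give the minimum, -1
```

With the default reduction, the scalar returned by the class instance should equal the mean of the per-sample values returned by the function handle.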

(Optional) Type of tf.keras.losses.Reduction to apply to the loss. Default value is AUTO. AUTO indicates that the reduction option will be determined by the usage context.
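What a reduction does to a batch of per-sample losses can be sketched in plain Python. The helper `reduce_loss` below is hypothetical and is not a Keras function; it only mirrors the meaning of the option names. It assumes the common case in which AUTO resolves to averaging over the batch.

```python
def reduce_loss(per_sample, reduction="sum_over_batch_size"):
    """Hypothetical helper that mirrors the meaning of the reduction options."""
    values = [float(v) for v in per_sample]
    if reduction == "none":
        return values                      # keep one value per sample
    if reduction == "sum":
        return sum(values)                 # add all per-sample losses
    if reduction == "sum_over_batch_size":
        return sum(values) / len(values)   # average over the batch
    raise ValueError(f"unknown reduction: {reduction!r}")

per_sample_losses = [0.5, 1.5, 4.0]
unreduced = reduce_loss(per_sample_losses, "none")
total = reduce_loss(per_sample_losses, "sum")
mean = reduce_loss(per_sample_losses)      # assumed behaviour of AUTO
```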

Binary and Multiclass Loss in Keras

These loss functions are useful in algorithms that have to assign the input object to one of two or more classes. Spam classification is an example of this type of problem. In Keras, loss functions are passed during the compile stage. One way to do this is to create an instance of the loss class and pass it in.
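A minimal sketch of passing a loss class instance at the compile stage is given here. The model architecture, the four input features and the optimizer are assumptions made for illustration; only the pattern of creating the loss object and handing it to `compile` comes from the text.

```python
import numpy as np
from tensorflow import keras

# Hypothetical binary classifier (e.g. spam vs. not spam) on 4 features.
model = keras.Sequential([
    keras.Input(shape=(4,)),
    keras.layers.Dense(8, activation="relu"),
    keras.layers.Dense(1, activation="sigmoid"),
])

# The loss is an instance of the loss class, created with its own settings.
# from_logits=False matches the sigmoid output, which is already a probability.
loss_fn = keras.losses.BinaryCrossentropy(from_logits=False)
model.compile(optimizer="adam", loss=loss_fn, metrics=["accuracy"])

# The same instance can also be called directly on labels and predictions.
example_loss = loss_fn(np.array([[1.0]], dtype="float32"),
                       np.array([[0.5]], dtype="float32"))
```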

Using the class is advantageous because you can pass some additional parameters. In machine learning, a loss function is used to measure the error or deviation in the learning process. Keras requires a loss function during the model compilation process. Keras has the low-level flexibility to implement arbitrary research ideas while offering optional high-level convenience features to speed up experimentation cycles.

An accessible superpower. Because of its ease of use and focus on user experience, Keras is the deep learning solution of choice for many university courses. The cross-entropy loss classes compute the cross-entropy loss between the labels and predictions.

Keras: custom loss function with final MAX, instead of standard MEAN. I wrote my own loss function (it is the standard mean absolute error): def my_mae(y_true, y_pred): return K.… For each example, there should be a single floating-point value per prediction.
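Since the body of `my_mae` is cut off above, the following is only a sketch of what such a pair of functions could look like. It assumes the original averaged the absolute errors over the last axis, and it uses TensorFlow ops instead of the Keras backend alias `K`. The name `my_max_ae` and the sample values are hypothetical.

```python
import tensorflow as tf

def my_mae(y_true, y_pred):
    # Standard mean absolute error: average |error| over the last axis.
    return tf.reduce_mean(tf.abs(y_pred - y_true), axis=-1)

def my_max_ae(y_true, y_pred):
    # Same element-wise error, but the final step takes the MAX, not the MEAN.
    return tf.reduce_max(tf.abs(y_pred - y_true), axis=-1)

# One sample with three outputs; the absolute errors are 0.5, 0.0 and 2.0.
y_true = tf.constant([[1.0, 2.0, 3.0]])
y_pred = tf.constant([[1.5, 2.0, 5.0]])

mae_value = my_mae(y_true, y_pred)     # mean of the three errors
max_value = my_max_ae(y_true, y_pred)  # largest of the three errors
```

Both functions return one value per sample. When such a function is passed to `model.compile`, Keras still averages those per-sample values over the batch, so the MAX here applies within each sample only.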

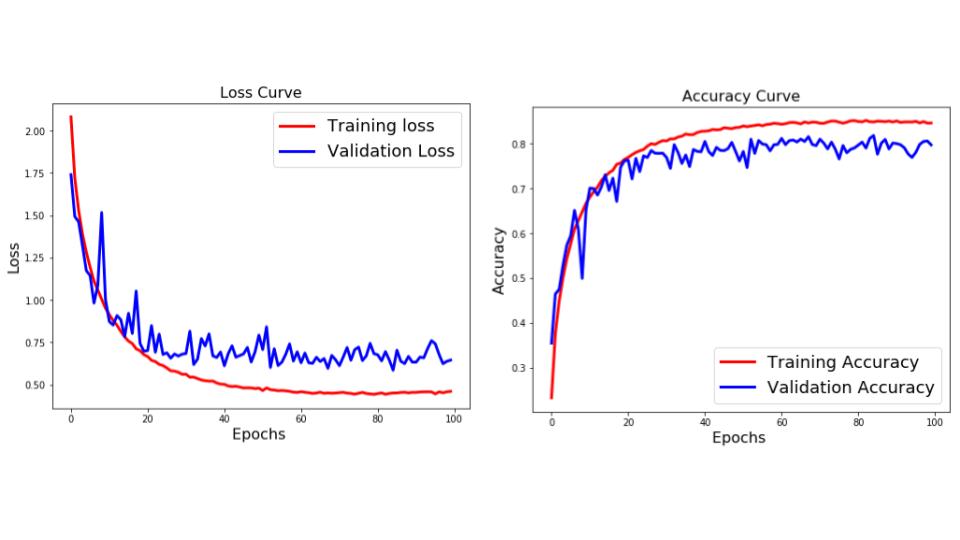

One of the fundamental principles of Keras is to keep things simple while letting the user retain full control when needed. Your training run is rather abnormal, as your loss is actually increasing. This could be due to a learning rate that is too large, which is causing you to overshoot optima.
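The overshooting effect can be reproduced on a toy problem without any library. The sketch below runs plain gradient descent on f(x) = x², a stand-in chosen for illustration and not the network from the question. The function name and both learning rates are assumptions.

```python
def gradient_descent_losses(learning_rate, steps=5, x0=1.0):
    """Minimize f(x) = x**2 and return the loss after each step."""
    x = x0
    losses = []
    for _ in range(steps):
        grad = 2.0 * x                  # derivative of x**2
        x = x - learning_rate * grad    # gradient descent update
        losses.append(x * x)
    return losses

small_lr_losses = gradient_descent_losses(0.1)  # each step moves closer to 0
large_lr_losses = gradient_descent_losses(1.1)  # each step jumps past 0 and further away
```

With the small rate the loss shrinks at every step, while with the large rate every update lands further from the minimum than the previous one, so the loss grows.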

I have a sigmoid activation function in the output layer to squeeze the output between 0 and 1, but maybe ... The mean squared error loss function can be used in Keras by specifying 'mse' or 'mean_squared_error' as the loss function when compiling the model. Keras is a Python library for deep learning that wraps the efficient numerical libraries Theano and TensorFlow.
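As a sketch of the two spellings, the snippet below compiles two copies of a small regression model, one with each string. The model, the optimizer and the toy data are assumptions made for illustration.

```python
import numpy as np
from tensorflow import keras

def build_regressor():
    # Hypothetical one-layer regression model on 3 input features.
    return keras.Sequential([keras.Input(shape=(3,)), keras.layers.Dense(1)])

model_a = build_regressor()
model_a.compile(optimizer="sgd", loss="mse")

model_b = build_regressor()
model_b.compile(optimizer="sgd", loss="mean_squared_error")

# Give both models identical weights so that only the loss name differs.
model_b.set_weights(model_a.get_weights())

x = np.ones((4, 3), dtype="float32")
y = np.zeros((4, 1), dtype="float32")
loss_a = model_a.evaluate(x, y, verbose=0)
loss_b = model_b.evaluate(x, y, verbose=0)
```

Both names refer to the same loss, so the two evaluations should agree.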

In this tutorial, you will discover how you can use Keras to develop and evaluate neural network models for multi-class classification problems. The loss function has a critical role to play in machine learning.

Loss is a way of calculating how well an algorithm fits the given data. If the predicted values deviate too much from the actual values, the loss function will produce a very large number.
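A small worked example makes this concrete. It uses mean squared error, chosen here only as a familiar loss, and made-up numbers. The function is written in plain Python and is not the Keras implementation.

```python
def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

y_true = [3.0, 5.0, 7.0]

# Predictions close to the targets: squared errors 0.25, 0.0, 0.25.
close_fit = mean_squared_error(y_true, [3.5, 5.0, 6.5])

# Predictions far from the targets: squared errors 100, 100, 400.
poor_fit = mean_squared_error(y_true, [13.0, -5.0, 27.0])
```

The poor fit gives a loss of 200, against roughly 0.17 for the close fit.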

When we need to use a loss function (or metric) other than the ones available, we can construct our own custom function and pass it to the model. Machine Learning with Keras: Different Validation Loss for the Same Model.

I am trying to use Keras to train a simple feedforward network. I tried two different methods of what I think ... The script imports Input and Dense from keras.layers, Model and Sequential from keras.models, and numpy as np.

The loss is just a scalar that you are trying to minimize. For instance, a cosine proximity loss will usually be negative (trying to make proximity as high as possible by minimizing a negative scalar).

According to the Keras documentation, the model.fit method returns a History callback, which has a history attribute containing the lists of successive losses and other metrics. The call takes the form model.fit(X, y, validation_split=...).
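Reading the losses back from the returned object can be sketched as below. The random data, the tiny model, the number of epochs and the value `validation_split=0.2` are all assumptions made for illustration. In particular, the split value is cut off in the text above.

```python
import numpy as np
from tensorflow import keras

# Random toy data, made up for illustration.
rng = np.random.default_rng(0)
X = rng.normal(size=(40, 3)).astype("float32")
y = rng.normal(size=(40, 1)).astype("float32")

model = keras.Sequential([keras.Input(shape=(3,)), keras.layers.Dense(1)])
model.compile(optimizer="sgd", loss="mse")

# validation_split=0.2 is an assumed value, not taken from the source.
hist = model.fit(X, y, validation_split=0.2, epochs=3, verbose=0)

train_losses = hist.history["loss"]    # one entry per epoch
val_losses = hist.history["val_loss"]  # present because of validation_split
```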

I needed the loss function to carry the result of some Keras layers, so I created those layers as an independent model and appended them to the end of the model.
